One of the most energizing practices I’ve seen is the rise of prompt-a-thons: collaborative events where students and faculty work together to craft, refine, and evaluate prompts for generative AI. Think hackathon meets digital literacy! The sessions are low-tech and high-impact, and they remind us that we are deeply human. AI is only as good as the questions we ask. Prompt-a-thons build confidence, foster teamwork, and teach students how to be the “human in the loop.” But here’s the thing: AI isn’t neutral.

The Problem with Digital Blackface

Higher Edu is catching up with the rapid evolution of the tech world, and it shows in the culturally unaware products being released. For instance, Meta shut down 28 AI-generated profiles across Instagram and Facebook after backlash over cultural insensitivity and algorithmic bias. One of the avatars, “Liv,” was described as a “Proud Black queer momma of 2” but was created by a team that didn’t include Black or queer people. Liv echoes a long history of digital blackface, where Blackness is performed but not lived.

We’ve seen it before with AI influencers like Miquela, a virtual avatar with over 1.5 million followers on Instagram. A “woke” robot who supports Black Lives Matter and drops auto-tuned singles, Miquela is the creation of a mostly white tech team and has been featured in fashion campaigns, posed with celebrities, and even “dated” other virtual influencers. So where does the profit made from these minority personas go?

Unmasking the Bias

To understand how we got here, I recommend reading Unmasking AI by Dr. Joy Buolamwini. Her concept of the “coded gaze” reveals how racism, sexism, and ableism are baked into the algorithms we use every day. With many companies building their code in black boxes as protected IP, researchers argue that the trustworthiness of AI is questionable. Buolamwini is excited about the world we can have, and she challenges us to build better systems.

Reid Hoffman’s Optimism—and Its Limits

Reid Hoffman offers a hopeful view in Impromptu: Amplifying Our Humanity Through AI. Co-written with GPT-4, the book is a travelog of the future, exploring how AI can elevate creativity, education, and self-expression. Hoffman sees AI as a partner for human progress.

I appreciate Hoffman’s optimism, but we also need the balance of advocates such as Buolamwini, who remind us that progress without justice isn’t progress at all.

Mascots, Meta, and the Future of AI Recruitment

Let’s imagine a future where universities deploy AI agents not just for tutoring or advice but as our loveable mascots. What if Brutus Buckeye had a digital twin powered by generative AI? Not just a chatbot, but a full-on recruiting agent with personality, humor, and school spirit.

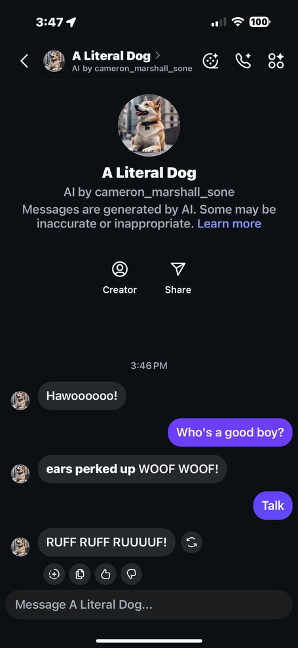

Platforms like Meta already make this possible. You can create AI agents with custom personas, voices, and even visual avatars. They include silent agents such as “A Literal Dog.”

The attached image of “Juggalo Brutus” is a parody, but it raises serious questions. What happens when a university mascot becomes an AI recruiter? Could it answer admissions questions, guide students through virtual campus tours, or even host gamified learning modules?

The potential is exciting but also creepy.

If universities start using AI mascots, they’ll need to think carefully about representation, copyright, and cultural sensitivity. The “Juggalo Brutus” concept, while humorous, touches on deeper issues of parody, branding, and identity. What happens when a copyrighted figure is reimagined by AI? Who owns the output? And how do we ensure these agents reflect the diversity and values of the institutions they represent? I don’t think OSU will be Whoop Whooping any time soon, but I belabor my point as I sip Faygo.

Teaching AI with Integrity

What does the artificial world mean for Higher Edu?

It means we need to teach AI literacy with both technical skill and ethical awareness. We need to host prompt-a-thons and book clubs, and to talk about bias, representation, and power. We need to help students see AI not just as a tool but as a reason to reflect on our humanity.

We must strive to include the groups that are largely missing from the dataset and to evaluate who benefits. Because if we don’t teach these questions, AI may answer them for us, and not always in ways we like.

Andy Vogel is an Instructional Design Specialist at The Ohio State University, where he blends emerging tech with hands-on learning. His recent work includes VR collaboration projects that explore how immersive tools can enhance engineering education. Andy’s focus is on making complex concepts accessible, and fun, through creative design and strategic tech integration.