The short-form video wars have entered a new phase, and this time the creators aren’t human.

Meta’s “Vibes” and OpenAI’s “Sora” are AI-native platforms that generate TikTok-style content entirely from text prompts. No cameras. No ring lights. No influencers. Just prompts, models, and remix buttons.

🌀 The Rise of AI-Generated Feeds

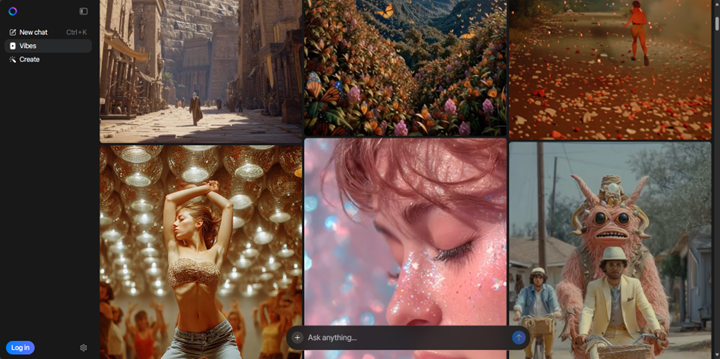

Meta’s Vibes, integrated into its Meta AI app, lets users generate short-form video collages using Llama-based models and Midjourney-style visuals. OpenAI’s Sora, on the other hand, is a standalone app built around its Sora 2 model. It features a vertical, swipe-to-scroll feed of 10-second AI-generated clips—no uploads allowed.

Random AI-generated content.

Both platforms encourage remixing: you can take someone else’s AI-generated video and spin it into your own. Sora even lets you insert your likeness into others’ clips via “cameos,” with consent notifications baked in.

🔁 Remix Culture or Regurgitation?

This remixability is a double-edged sword. On one hand, it’s a powerful tool for idea generation—like a collaborative sketchpad where perspectives evolve through iteration. On the other hand, it risks becoming a feedback loop of derivative content. Critics have already dubbed it “AI slop,” questioning whether these platforms are just dopamine farms optimized for engagement rather than expression.

🧠 Creativity or Consumption?

Are we witnessing the birth of a new creative medium, or the automation of creativity itself? I’ve been experimenting with this myself. I remixed an AI-generated image into an extended video using a slowed-down version of the same audio track, creating a surreal, dreamlike loop that felt both familiar and uncanny. You can check out the dystopian world, complete with a floating eyeball, here.

In another, I edited one of my own photos and turned it into a video using Meta’s tools. The result? A jarring, artifact-laden animation that felt more like a hallucination than a memory.

I also noticed a lack of attribution when remixing. Both muse and creator get lost in the slop.

🧩 Final Thought

As AI-generated content floods our feeds, the question isn’t just “Is it good?” It’s “Is it ours?” These tools are powerful, but we must ask whether they’re helping us create or just remixing faceless ideas in an endless loop.

Andy Vogel is an Instructional Design Specialist at The Ohio State University, where he blends emerging tech with hands-on learning. His recent work includes VR collaboration projects that explore how immersive tools can enhance engineering education. Andy’s focus is on making complex concepts accessible and fun through creative design and strategic tech integration.